Hype Edit 1 Benchmark

Hype-Edit-1 introduces a new reliability benchmark for frontier image editing models, helping teams compare instruction-following and edit consistency.

- Sourceful Research

- Image Editing

- Benchmarks

- Reliability

Richard Allen

February 2, 2026

Sourceful Research is introducing our first image editing benchmark, Hype-Edit-1, designed to measure reliability in frontier image editing models. As editing capabilities advance, the gap between impressive demos and dependable, repeatable edits in real workflows keeps getting wider. Hype-Edit-1 aims to close that gap by providing a shared way to evaluate reliability across models.

What Hype-Edit-1 focuses on

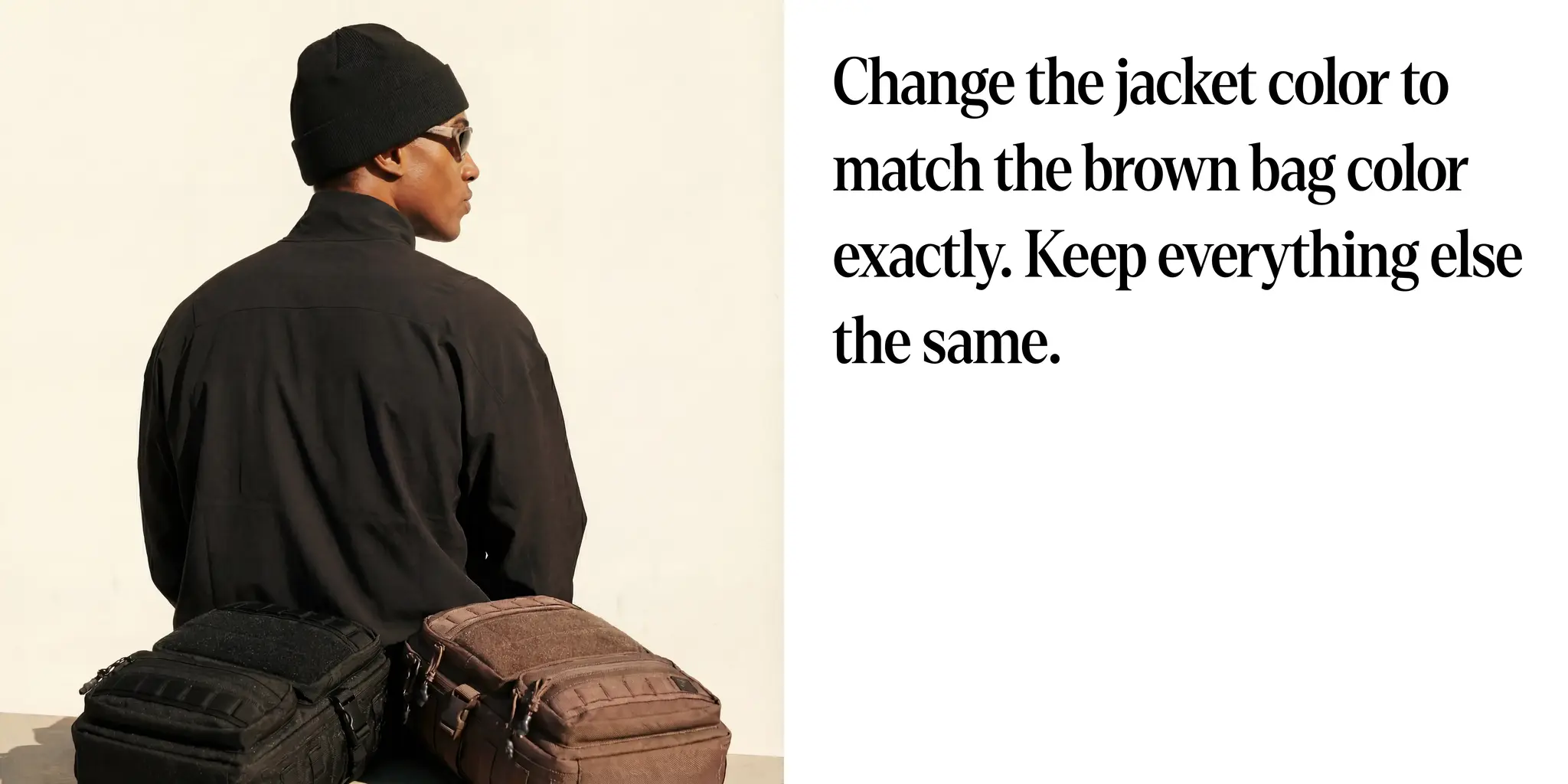

Hype-Edit-1 is built to evaluate whether models can follow editing instructions precisely while preserving everything that should remain unchanged. It emphasizes practical editing reliability over purely aesthetic outcomes, giving teams a clearer signal on how a model behaves in real-world editing workflows.

Crucially, it measures the difference between what a model is capable of on a task and how reliably it can repeat that result.

Results

Combined results (generated on 2026-01-22). 100 tasks, 10 images per task, 1,000 total images per model.

| Model | Pass rate | Pass@4 | Pass@10 | Expected attempts | Effective cost/success | Hype gap |
|---|---|---|---|---|---|---|
| riverflow-2-b1 | 82.7% | 90.5% | 93.0% | 1.40 | $0.66 | 6.0% |
| gemini-3-pro-preview | 63.8% | 79.9% | 87.0% | 1.85 | $0.95 | 17.0% |
| gpt-image-1.5 | 61.2% | 70.3% | 77.0% | 2.04 | $1.30 | 16.0% |
| flux-2-max | 45.7% | 63.8% | 75.0% | 2.38 | $1.41 | 25.0% |
| qwen-image-edit-2511 | 45.4% | 57.4% | 66.0% | 2.48 | $1.33 | 25.0% |
| seedream-4.0 | 35.6% | 57.4% | 72.0% | 2.64 | $1.42 | 38.0% |
| seedream-4.5 | 34.4% | 59.9% | 77.0% | 2.63 | $1.39 | 37.0% |

Benchmark design

Hype-Edit-1 combines a curated task set with a consistent evaluation protocol so results can be compared across labs, models, and release cycles. The benchmark is designed to be model-agnostic and to avoid overfitting to any single editing style or capability.

Evaluation protocol

Each task is well defined and focuses on targeted edits rather than ambiguous, creative instructions. A panel of 5 human judges rated each edit pass/fail without seeing which model generated it, and we used majority voting to determine the result per task instance. We also provide example code for a VLM-based judge using Gemini 3 Flash Preview, which has around 80% agreement with humans. We've found that the VLM-based judge tends to be stricter than humans, picking up detail changes or color shifts that are barely perceptible to most people.
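As a rough illustration of the protocol described above, the following Python sketch aggregates five blind pass/fail votes by majority and measures how often an automated judge matches the human majority. The function names and the vote data are hypothetical and are not taken from the benchmark's released code; the example only shows the arithmetic.

```python
def majority_vote(votes):
    """Return True when strictly more than half of the boolean votes are passes."""
    if not votes:
        raise ValueError("need at least one vote")
    return sum(votes) * 2 > len(votes)


def agreement_rate(human_labels, judge_labels):
    """Fraction of task instances where the judge matches the human majority."""
    if len(human_labels) != len(judge_labels):
        raise ValueError("label lists must have the same length")
    matches = sum(h == j for h, j in zip(human_labels, judge_labels))
    return matches / len(human_labels)


# Hypothetical votes: one row per task instance, one column per human judge.
panel_votes = [
    [True, True, False, True, True],
    [False, False, True, False, False],
    [True, False, True, False, True],
    [True, True, True, True, True],
    [False, True, False, False, True],
]
human_majority = [majority_vote(v) for v in panel_votes]

# Hypothetical automated-judge labels; it fails one edit the humans passed.
vlm_labels = [True, False, False, True, False]
agreement = agreement_rate(human_majority, vlm_labels)
print(human_majority, agreement)
```

With an odd panel size such as 5, a strict majority always exists, so no tie-breaking rule is needed.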

Why reliability matters

For brand, packaging, and marketing assets, small inaccuracies can introduce costly rework. Reliability is what turns an image editing model from a creative toy into a dependable production tool. Hype-Edit-1 gives researchers and developers a common language to compare model behavior and to track progress over time.

Without a greater focus on reliability, the relationship between humans and AI remains uneasy, because the model feels like an inconsistent work partner from one image to the next.

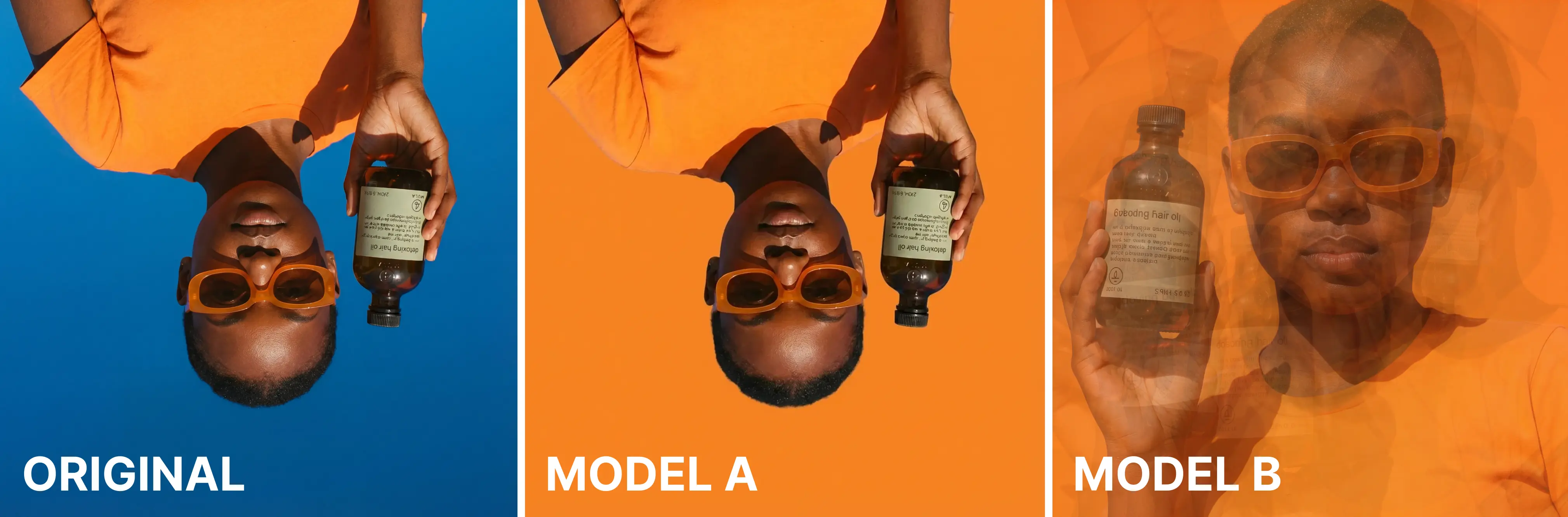

Example tasks

We split tasks into 4 categories: Change (50%), Remove (21%), Restructure (17%) and Enhance (12%).

Metrics

Reliability and effective cost

Let $y_{t,k} \in \{0,1\}$ denote whether the $k$-th attempt on task $t$ is successful. With $T$ tasks and $K$ repeats, overall reliability is:

$$R = \frac{1}{TK} \sum_{t=1}^{T} \sum_{k=1}^{K} y_{t,k}.$$

We report Pass Rate (P@1) as the probability of success on the first attempt and Pass@10 (P@10) as the probability of at least one success across the 10 attempts.
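To make these definitions concrete, here is a minimal Python sketch that computes the overall reliability, the first-attempt success rate, and Pass@10 from a small outcome matrix. The matrix is made-up data with 4 tasks and 10 attempts each, and the helper names are hypothetical. Which of the first two quantities the published "Pass rate" column corresponds to is not confirmed here, so the sketch computes both.

```python
def reliability(y):
    """Mean of y[t][k] over every task t and attempt k (the quantity R)."""
    total_attempts = sum(len(row) for row in y)
    return sum(sum(row) for row in y) / total_attempts


def pass_at_k(y, k):
    """Fraction of tasks with at least one success in their first k attempts."""
    return sum(1 for row in y if any(row[:k])) / len(y)


# Hypothetical outcomes: one row per task, one column per attempt (1 = pass).
y = [
    [1] * 10,                        # succeeds every time
    [1, 0, 1, 1, 0, 1, 1, 1, 0, 1],  # succeeds 7 times out of 10
    [0, 0, 0, 1, 0, 0, 0, 0, 0, 0],  # succeeds once, not on the first try
    [0] * 10,                        # never succeeds
]

R = reliability(y)             # 18 successes out of 40 attempts
first_try = pass_at_k(y, 1)    # tasks whose first attempt passed
best_of_10 = pass_at_k(y, 10)  # tasks with at least one pass in 10 attempts
print(R, first_try, best_of_10)
```

The third task shows why the metrics differ: it counts toward Pass@10 but contributes almost nothing to reliability.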

We report expected attempts and effective cost using the same retry-cap model implemented in our internal analysis script; the equations in the Cost model section are sufficient to reproduce it.

Cost model

The benchmark cost model combines (1) the model's per-candidate generation cost, (2) an estimated human review cost per candidate, and (3) the expected number of attempts required for a task. For model m, the per-attempt cost is:

$$C_{\text{attempt}} = C_{\text{model}}(m) + C_{\text{review}}.$$

We estimate review cost with a default hourly rate of $50 and 20 seconds of inspection time per image:

$$C_{\text{review}} = (50/3600) \cdot 20 \approx 0.278.$$

For each task $t$, we estimate a pass rate $p_t$ from the full $K=10$ candidates and compute the expected number of attempts with a maximum retry budget $A=4$ (reflecting a practical user retry limit). The expected attempts and success probability under this cap are:

$$E_t = \begin{cases} \dfrac{1-(1-p_t)^{A}}{p_t} & \text{if } p_t > 0, \\ A & \text{if } p_t = 0, \end{cases} \qquad S_t = 1-(1-p_t)^{A}.$$

We then aggregate across tasks to compute pass@4 and effective cost per success using the retry-cap model:

$$\mathrm{P@4} = \frac{1}{T}\sum_{t} S_t, \qquad E = \frac{1}{T}\sum_{t} E_t, \qquad C_{\text{eff}} = \frac{E \cdot C_{\text{attempt}}}{\mathrm{P@4}}.$$

Hype Gap

We report the Hype Gap (Best-of-10 Uplift) as the difference, in percentage points, between Pass@10 and Pass@1. Let P@1 be the success rate on the first attempt and P@10 be the probability of achieving at least one success within 10 attempts. Then:

$$\text{HypeGap} = \mathrm{P@10} - \mathrm{P@1}.$$

A lower Hype Gap indicates consistent performance between a model's typical outputs and its best-of-10 results, while a larger Hype Gap implies that strong edits are possible but less reliable in practical use.
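The retry-cap cost model and the Hype Gap can be sketched together in Python as follows. This is an illustrative reimplementation of the formulas in this section, not the internal analysis script; the function names, the per-task pass rates, and the model cost of $0.10 per image are hypothetical values chosen for the example.

```python
def review_cost(hourly_rate=50.0, seconds_per_image=20.0):
    """Human review cost per candidate image, in dollars."""
    return hourly_rate / 3600.0 * seconds_per_image


def expected_attempts(p, max_attempts=4):
    """Expected attempts used on a task with pass rate p under the retry cap."""
    if p <= 0:
        return float(max_attempts)  # every retry is spent without success
    return (1 - (1 - p) ** max_attempts) / p


def success_within_cap(p, max_attempts=4):
    """Probability of at least one success within the retry cap."""
    return 1 - (1 - p) ** max_attempts


def effective_cost(task_pass_rates, model_cost, max_attempts=4):
    """Effective cost per success: mean attempts times attempt cost over P@A."""
    n_tasks = len(task_pass_rates)
    mean_attempts = sum(expected_attempts(p, max_attempts) for p in task_pass_rates) / n_tasks
    pass_at_cap = sum(success_within_cap(p, max_attempts) for p in task_pass_rates) / n_tasks
    if pass_at_cap == 0:
        return float("inf")  # no task ever succeeds, so cost per success is unbounded
    return mean_attempts * (model_cost + review_cost()) / pass_at_cap


def hype_gap(p_at_10, p_at_1):
    """Best-of-10 uplift: Pass@10 minus Pass@1 (multiply by 100 for points)."""
    return p_at_10 - p_at_1


# Hypothetical inputs: three tasks and an assumed model cost of $0.10 per image.
task_pass_rates = [1.0, 0.5, 0.0]
cost = effective_cost(task_pass_rates, model_cost=0.10)
gap = hype_gap(0.75, 0.5)
print(round(cost, 4), gap)
```

Note that a task with a pass rate of zero still consumes the full retry budget, which is why unreliable models are penalized twice: more attempts in the numerator and a lower Pass@4 in the denominator.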

Read the paper and code

- Paper: PDF

- Code and benchmark resources: GitHub repo

If you benchmark with Hype-Edit-1, we would love to hear your feedback and results.